I’ve recently been having fun integrating home automation throughout my home. Currently I have a Google Home and two Google Home Minis. Until now I’ve just been asking simple questions, setting reminders, turning lamps on and off, and so on, but now I want to start creating custom Google Home Actions myself. The Actions I want to create will store and update data in a data store so I can get it back out later with another Action, or build a user interface to view the data we’ve just stored.

In this post we’re going to build a simple Google Home Action that lets us tell our Google Home who won a game and which game they won, and then stores this information in Azure.

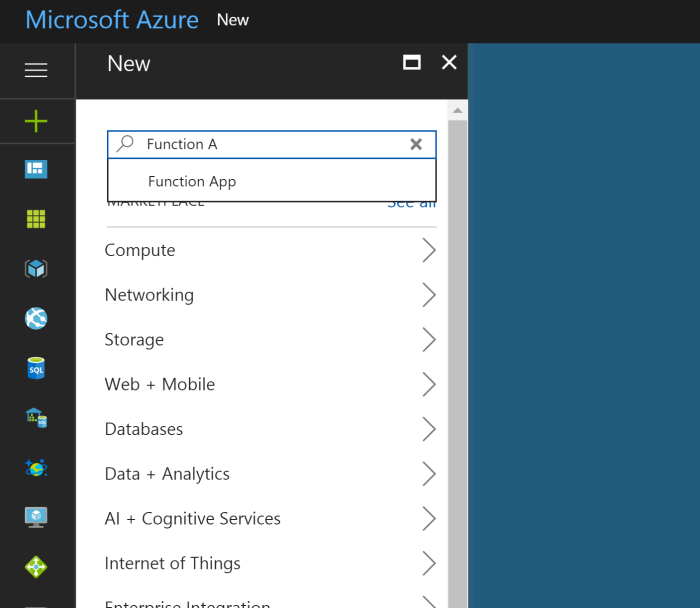

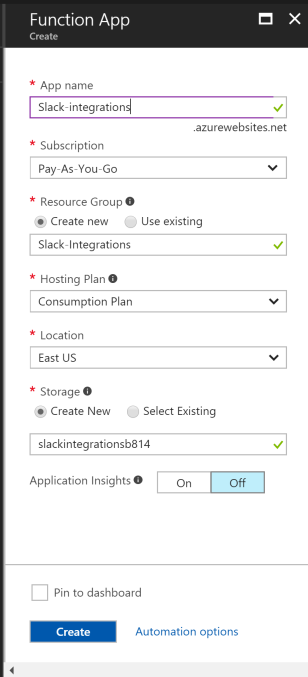

Really all I need is an API endpoint and somewhere to keep the data. For this, I’ve chosen Azure Functions + Azure Table Storage. Azure Functions are extremely simple, lightweight, cheap ways to stand up an API endpoint, so we’re going to use them for this example.

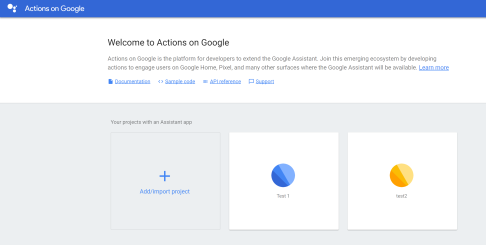

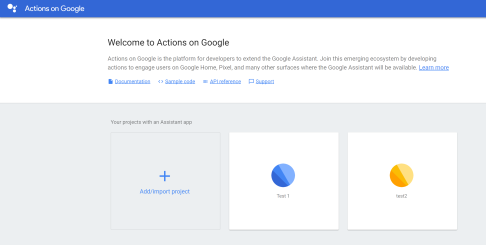

First, I needed to understand how to even create a Google Home Action. I found the Actions Console here and signed in with the same account I have linked to my Google Homes.

Next, we click Add/import project, give it a name, and create it!

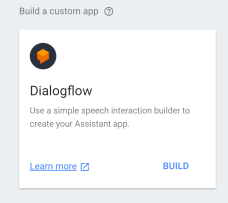

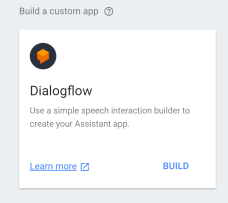

For this demo, we’re going to create a Dialogflow App. You can check out more of what a Dialogflow app consists of here. Essentially, Dialogflow apps are a more conversational type of Action where you can ask questions or give commands.

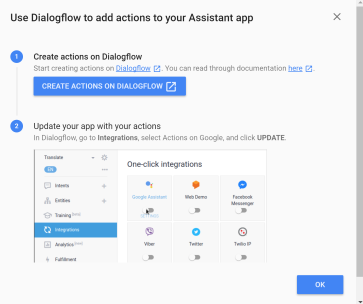

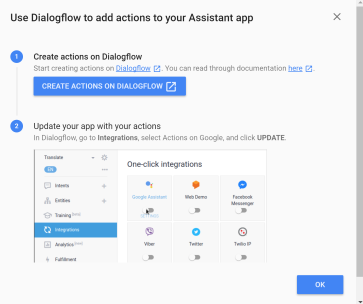

After you click Build you’re going to want to select Create Actions on Dialogflow.

This will take you to Dialogflow, which used to be called API.AI but was renamed after Google acquired it. Here is where you will design how the conversation will flow, and where you will hook it up to our Azure Function.

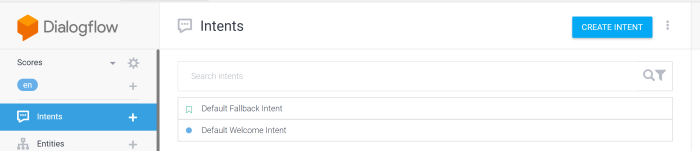

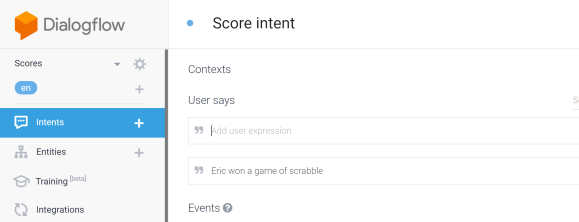

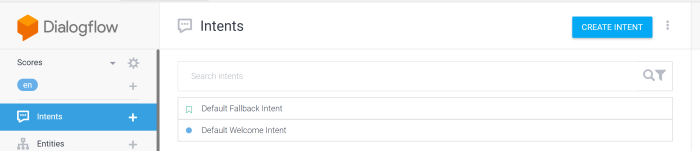

Create a Dialogflow project, and begin creating something called an Intent.

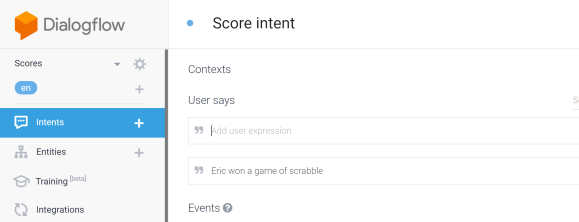

For this Action, I want to keep track of who won a game and the date on which we played it. So, if I say “Eric won a game of Scrabble”, I want to add an entry to our Azure storage. To do this, we type that example expression into the intent’s User says section (labeled Training phrases in newer versions of the Dialogflow console).

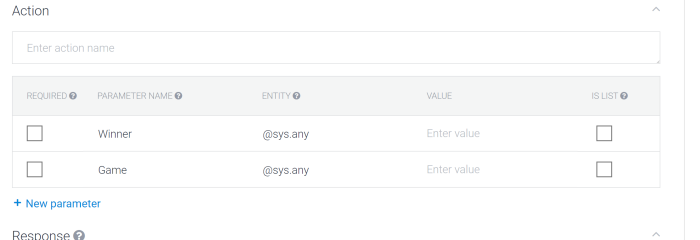

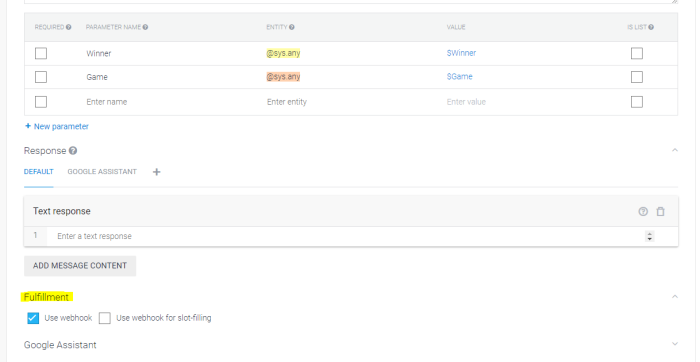

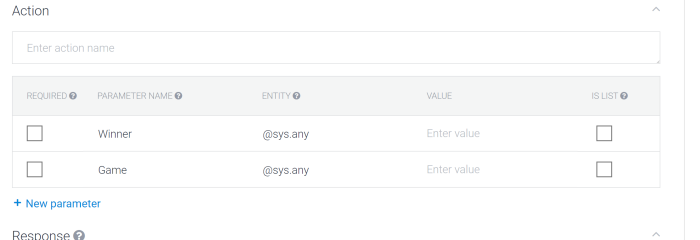

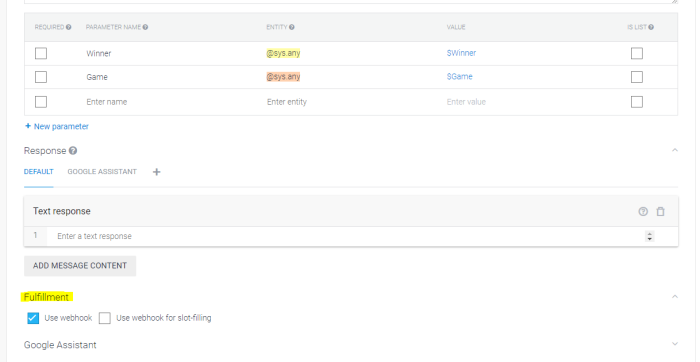

Now we need to create some parameters to pull out of our expression. In our case, we need two parameters: Winner and Game. Be sure to set the Entity to @sys.any.

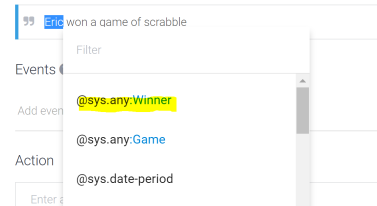

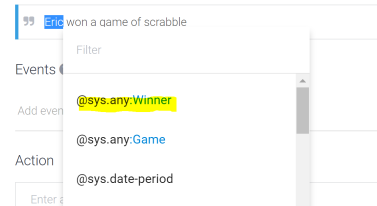

Once the parameters are created, we can use this same interface to assign words or segments of the expression we created to them, so they are passed along in the request payload. Simply highlight a word and a dialog will pop up that lets you select which parameter it should map to. Do this for both Winner and Game.

These parameters are what will be sent in the parameters object of the request, which I’ll describe below.

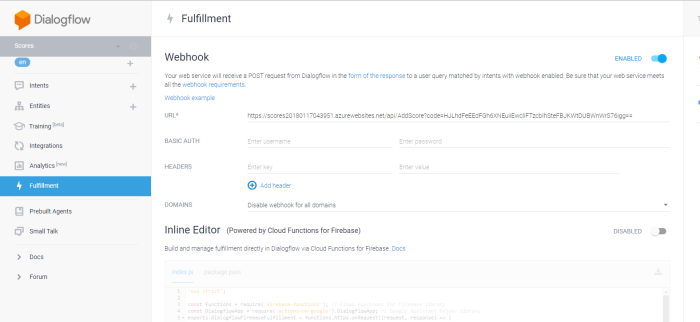

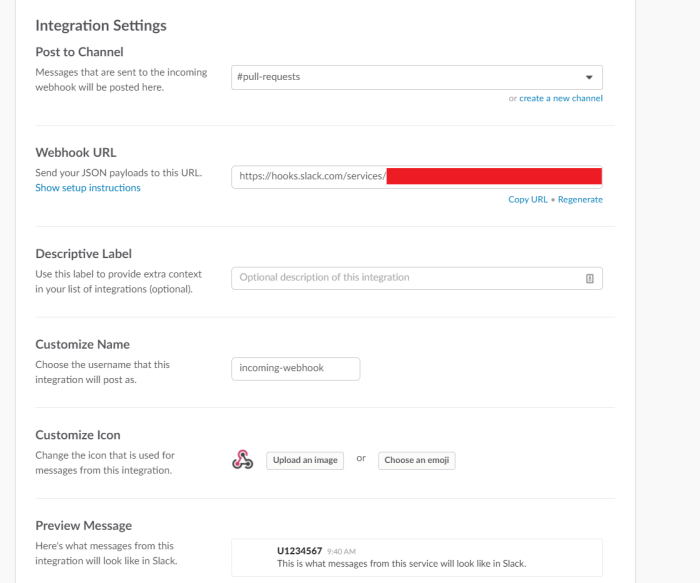

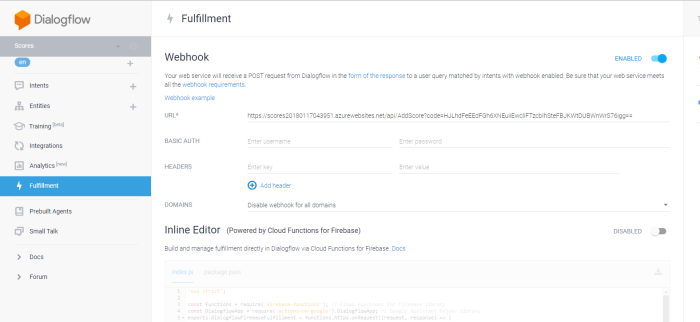

Great, we have the parameters we need for the request... but how do we actually call our Azure Function? We need to set up two more items: Fulfillment and Integrations. For Fulfillment, click on the Fulfillment menu option in Dialogflow. In here, we need to enable the Webhook option and provide the URL. In this instance, our webhook URL is going to be the URL of our Azure Function. After you’ve enabled the webhook and added the Azure Function URL, make sure you click Save!

Once you’ve enabled the Webhook/Fulfillment, we need to go back in to our Intent. You’ll now notice a Fulfillment option that you can expand and can use to enable the webhook for this intent.

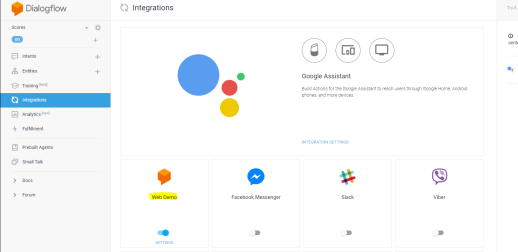

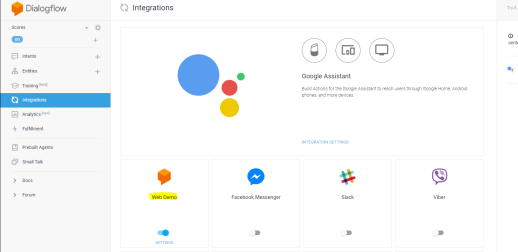

Finally, we need to enable the Web Demo integration in the Integrations tab in Dialogflow. When we do this, it gives us the ability to test this in a web application which is very useful.

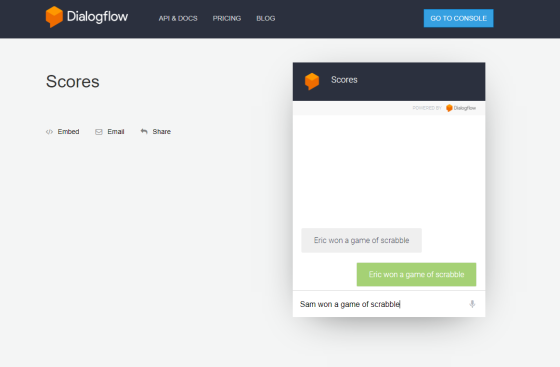

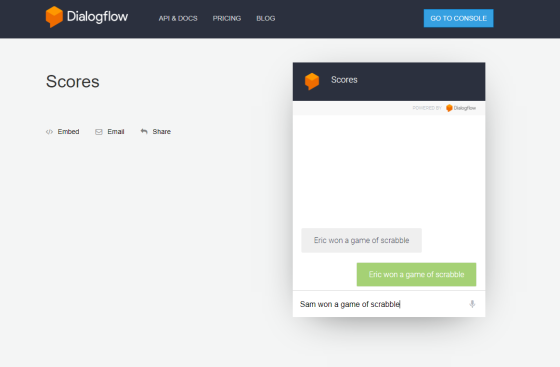

In the web application, we can type a command and it will send it to our Webhook, which is our Azure Function! We can type commands, questions, etc and get responses back.

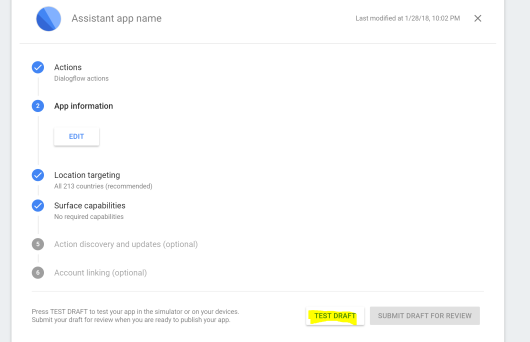

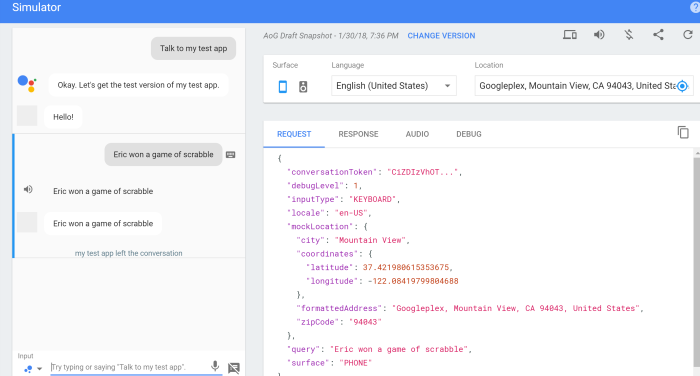

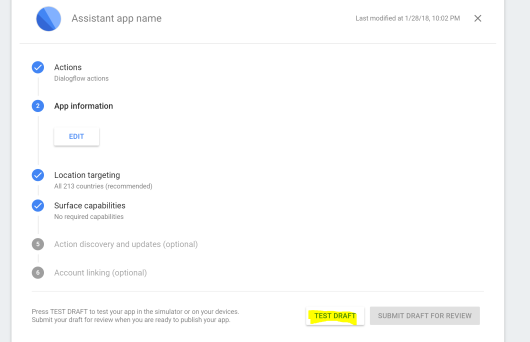

Even cooler, we can actually test this with our Google Home! In order to do this, you can go to the Actions console, select our Action, and click Test Draft.

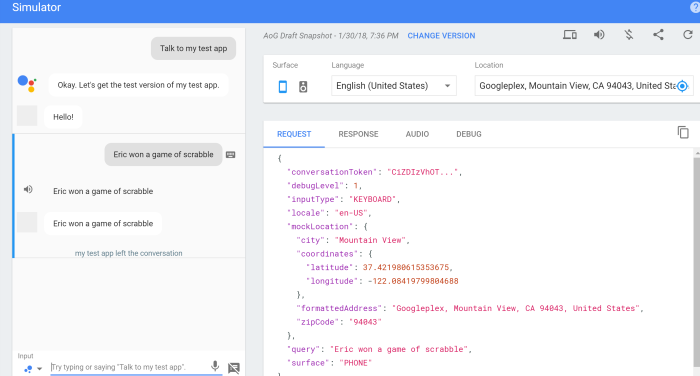

Doing this will bring up a simulator. Here you can talk to your test app by typing commands, as in the screenshot below, or you can actually talk to your Google Home (which will be in a demo on Function Junction soon!).

In order to use your Google Home, you can just say:

“Hey Google, talk to my test app”

The Google Home will reply with whatever you’ve set your initial response to be.

Then you can say: “{Your-name} won a game of {game}”

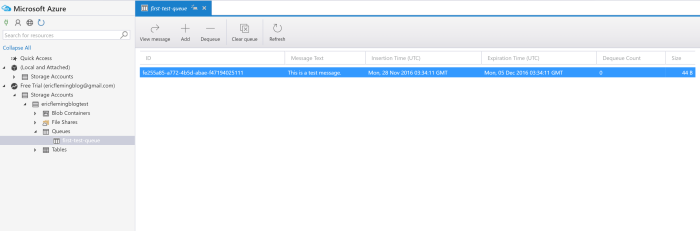

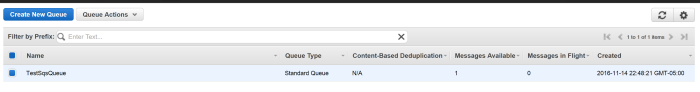

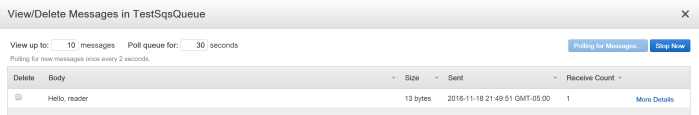

You’ll then see the entry show up in your Azure data store!

A quick note: your Google Home has to be linked to the same account that you’re creating your Google Home Actions with in order to test in this manner. I’ll be sure to post the Function Junction episode here after we’ve recorded it.

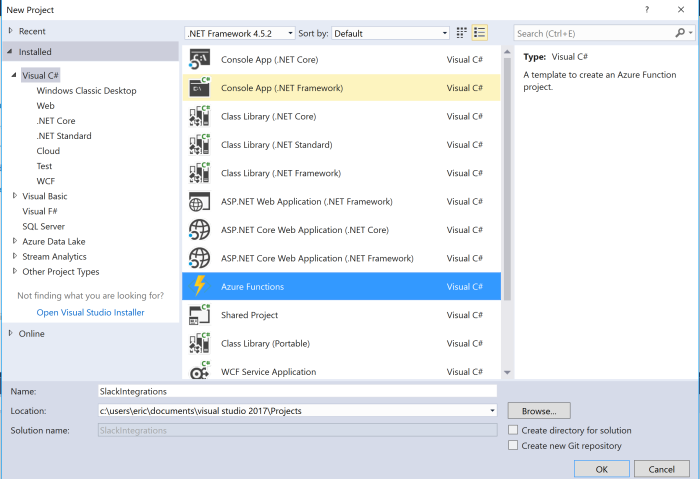

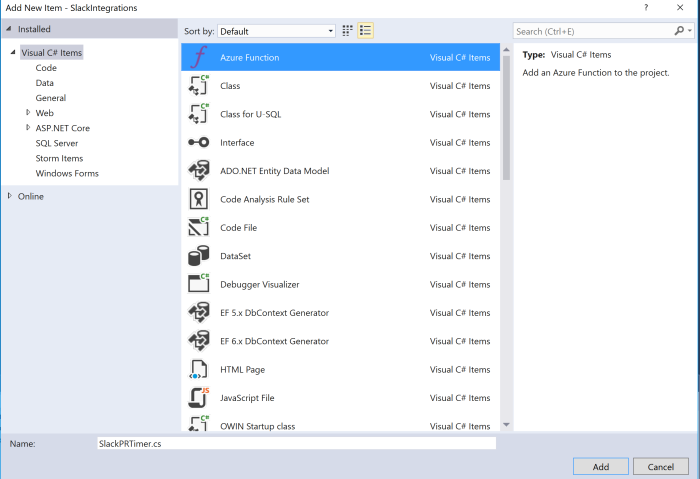

Oh yeah, the Azure Function!

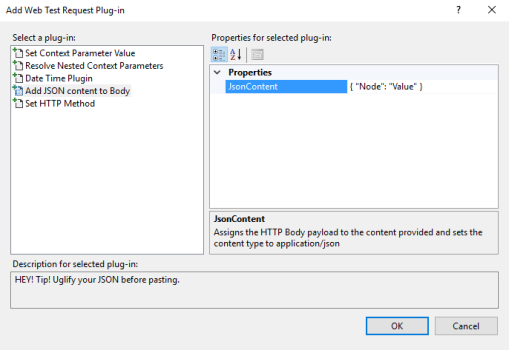

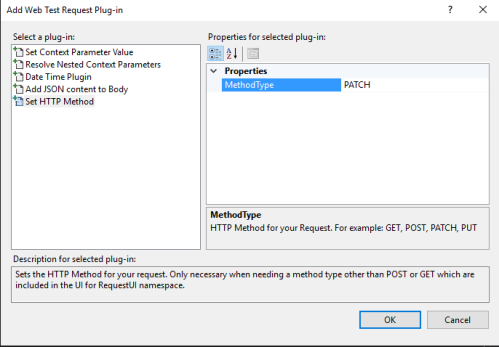

We’ve spent so much time setting up the Action that I almost forgot to show you my simple Azure Function for all of this! First of all, it helps to know what the format of the request looks like; this took me a few minutes to find. The entire Dialogflow webhook format can be found here. For this example, we really only care about the parameters object, so I created some POCO classes for my Azure Function to deserialize into.

    public class GoogleHomeRequest
    {
        public GoogleHomeResult Result { get; set; }
    }

    public class GoogleHomeResult
    {
        public GoogleHomeParameters Parameters { get; set; }
    }

    public class GoogleHomeParameters
    {
        public string Winner { get; set; }
        public string Game { get; set; }
    }

We’ll then be able to deserialize the incoming request and use the parameters in the code.
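To make the mapping concrete, here is a minimal sketch of that deserialization outside of Azure Functions. The sample body is a trimmed, hand-written stand-in for a real Dialogflow request (a real one carries many more fields), and I’m using System.Text.Json with case-insensitive matching purely for illustration; the Function itself relies on ReadAsAsync. The RequestParsingSketch class name is made up for this example.

```csharp
using System;
using System.Text.Json;

public class GoogleHomeRequest { public GoogleHomeResult Result { get; set; } }
public class GoogleHomeResult { public GoogleHomeParameters Parameters { get; set; } }
public class GoogleHomeParameters
{
    public string Winner { get; set; }
    public string Game { get; set; }
}

// Hypothetical helper class, not part of the original Function.
public static class RequestParsingSketch
{
    // Assumption: a trimmed sample body; only result.parameters matters here.
    public const string SampleBody = @"{
      ""result"": {
        ""resolvedQuery"": ""Eric won a game of Scrabble"",
        ""parameters"": { ""Winner"": ""Eric"", ""Game"": ""Scrabble"" }
      }
    }";

    public static GoogleHomeParameters Parse(string body)
    {
        // Case-insensitive so "result"/"parameters" bind to Result/Parameters.
        var options = new JsonSerializerOptions { PropertyNameCaseInsensitive = true };
        var request = JsonSerializer.Deserialize<GoogleHomeRequest>(body, options);

        // Returns null when the body has no result or no parameters.
        return request?.Result?.Parameters;
    }

    public static void Main()
    {
        var parameters = Parse(SampleBody);
        Console.WriteLine($"{parameters.Winner} won a game of {parameters.Game}");
    }
}
```

Fields the POCOs don’t declare, such as resolvedQuery, are simply ignored, which is why three tiny classes are enough.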

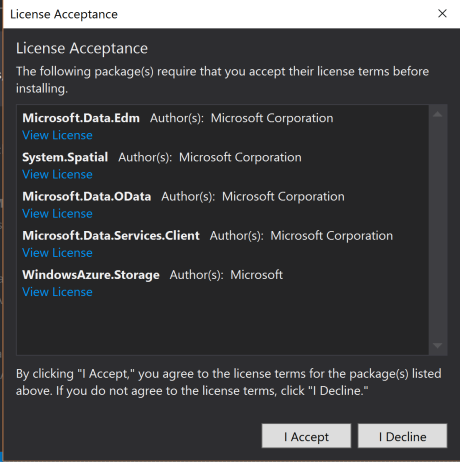

I’m using Azure Table Storage in this Function, but you can use any type of Azure Data storage you’d like! The attribute bindings make wiring up the different types of storage really simple.

Now, we have the parameters from the Action’s request and we’ve put some logic around them to check if they exist and if they do, we add a record to Azure Table Storage. But we need to send a response back to the Action. The action needs three items:

Speech – What the Google Home will say back to you

DisplayText – What a device will display back to you

Source – This will be set to webhook for this example

The response object will then look like this:

    public class Response
    {
        public string Speech { get; set; }
        public string DisplayText { get; set; }
        public string Source { get; set; }
    }
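If you’re curious what that object looks like as JSON, here is a rough sketch. As I read the Dialogflow webhook format, the field names are lower camel case (speech, displayText, source), so this example serializes with a camel-case naming policy. System.Text.Json and the ResponseSketch class are my choices for illustration only; in the Function, the host’s own serializer does this work.

```csharp
using System;
using System.Text.Json;

public class Response
{
    public string Speech { get; set; }
    public string DisplayText { get; set; }
    public string Source { get; set; }
}

// Hypothetical helper class, not part of the original Function.
public static class ResponseSketch
{
    public static string ToWebhookJson(string winner, string game)
    {
        var message = $"{winner} won a game of {game}";
        var response = new Response
        {
            Speech = message,       // what the Google Home says
            DisplayText = message,  // what a device with a screen shows
            Source = "webhook"
        };

        // Assumption: camel-case names, matching my reading of the webhook format.
        var options = new JsonSerializerOptions { PropertyNamingPolicy = JsonNamingPolicy.CamelCase };
        return JsonSerializer.Serialize(response, options);
    }

    public static void Main()
    {
        // Prints {"speech":"Eric won a game of Scrabble","displayText":"Eric won a game of Scrabble","source":"webhook"}
        Console.WriteLine(ToWebhookJson("Eric", "Scrabble"));
    }
}
```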

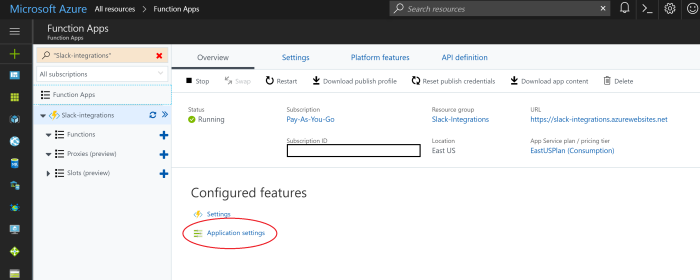

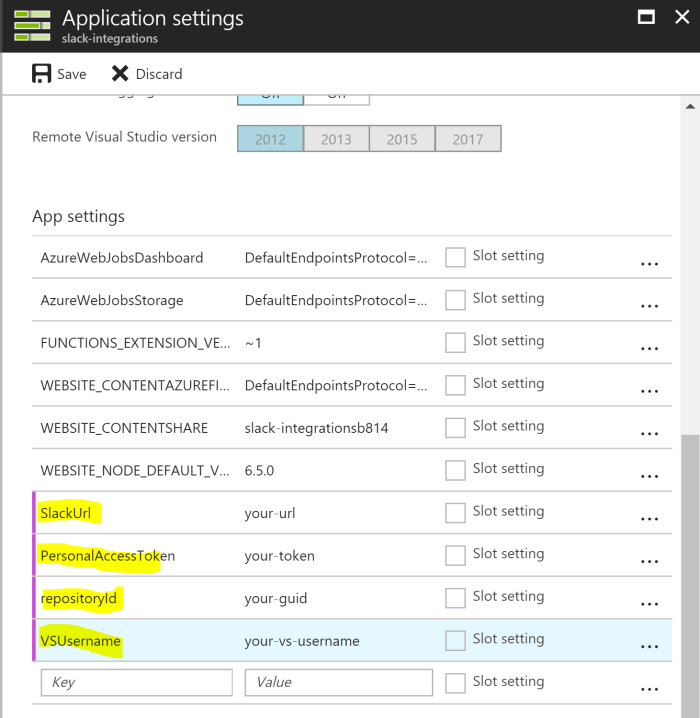

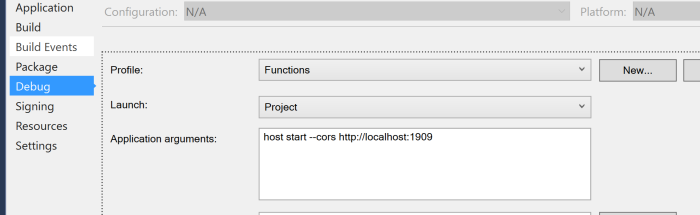

Here’s my full Azure Function! If you’re not too familiar with Azure Functions, make sure you set up your TableConnection in your Azure Function app settings when you deploy it. If you need help with deploying it, you can also check out some more Function Junction episodes on YouTube!

    using System;
    using System.Net;
    using System.Net.Http;
    using System.Threading.Tasks;
    using Microsoft.Azure.WebJobs;
    using Microsoft.Azure.WebJobs.Extensions.Http;
    using Microsoft.Azure.WebJobs.Host;
    using Microsoft.WindowsAzure.Storage.Table;

    namespace Scores
    {
        public static class AddScore
        {
            [FunctionName("AddScore")]
            public static async Task<HttpResponseMessage> Run(
                // Dialogflow calls the webhook with an HTTP POST, so the trigger accepts "post"
                [HttpTrigger(AuthorizationLevel.Function, "post", Route = null)]HttpRequestMessage req,
                // Output binding: anything added to this collector is written to the Games table
                [Table("Games", Connection = "TableConnection")]ICollector<GameStat> gameStat,
                TraceWriter log)
            {
                log.Info("C# HTTP trigger function processed a request.");

                var googleHomeRequest = await req.Content.ReadAsAsync<GoogleHomeRequest>();

                // Null-conditional access avoids a NullReferenceException on an empty or unexpected body
                var googleHomeParameters = googleHomeRequest?.Result?.Parameters;

                var response = new Response();

                // Only store a record when both parameters were extracted from the expression
                if (!string.IsNullOrEmpty(googleHomeParameters?.Winner) && !string.IsNullOrEmpty(googleHomeParameters?.Game))
                {
                    var now = DateTime.Now.ToLocalTime();

                    var newStat = new GameStat
                    {
                        GameName = googleHomeParameters.Game,
                        Winner = googleHomeParameters.Winner,
                        Date = now,
                        PartitionKey = googleHomeParameters.Winner,
                        // The GUID suffix keeps row keys unique when the same person wins the same game again
                        RowKey = googleHomeParameters.Game + Guid.NewGuid().ToString()
                    };

                    gameStat.Add(newStat);

                    response.DisplayText = $"{googleHomeParameters.Winner} won a game of {googleHomeParameters.Game}";
                    response.Source = "webhook";
                    response.Speech = $"{googleHomeParameters.Winner} won a game of {googleHomeParameters.Game}";
                }

                return req.CreateResponse(HttpStatusCode.OK, response);
            }
        }

        public class GoogleHomeRequest
        {
            public GoogleHomeResult Result { get; set; }
        }

        public class GoogleHomeResult
        {
            public GoogleHomeParameters Parameters { get; set; }
        }

        public class GoogleHomeParameters
        {
            public string Winner { get; set; }
            public string Game { get; set; }
        }

        public class Response
        {
            public string Speech { get; set; }
            public string DisplayText { get; set; }
            public string Source { get; set; }
        }

        public class GameStat : TableEntity
        {
            public string GameName { get; set; }
            public string Winner { get; set; }
            public DateTime Date { get; set; }
        }
    }
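As for the TableConnection setting mentioned above: when running locally with the Azure Functions tooling, app settings typically live in a local.settings.json file in the project. Here is a minimal sketch, assuming the local storage emulator is running; the values are placeholders, not my real settings.

```json
{
  "IsEncrypted": false,
  "Values": {
    "AzureWebJobsStorage": "UseDevelopmentStorage=true",
    "TableConnection": "UseDevelopmentStorage=true"
  }
}
```

The setting name has to match the Connection value on the Table attribute. Once deployed, the same name goes into the Function App’s application settings, with a real storage account connection string as its value.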